The fundamental flaws of AI

The invention of large language models (LLMs) has profoundly shaped the landscape of AI, sparking another wave of hype in the media and the industry, with some voices even speculating about the emergence of artificial general intelligence. What often goes overlooked in these optimistic accounts, however, is that the obstacles to engineering artificial general intelligence are more fundamental than commonly assumed.

One of the main reasons AI faces such fundamental problems lies in the flaws within the discipline's core research questions. Generally speaking, AI research revolves around two key questions. The first asks what intelligence is and how it can be defined. While this first question is shared with disciplines such as psychology, philosophy, education and neuroscience, the second is unique to AI and asks how intelligence can be engineered.

The problem, however, is that there is no clear answer to the first research question: what is intelligence? How can we engineer something that we struggle to define? When engineers design a car, they work with a clear definition of what a car is. In AI, by contrast, creating an intelligent system is far more ambiguous because intelligence itself lacks a universally accepted definition.

To navigate this dilemma, AI engineers adopt a pragmatic approach by defining AI based on its ability to perform tasks associated with human intelligence, which essentially means that AI is designed to mimic human behavior. Take ChatGPT, for instance. Strictly speaking, ChatGPT is not capable of reasoning or truly understanding; it is just very good at imitating human intelligence based on complex statistics. LLMs don't comprehend the responses they generate; they essentially calculate the probabilities of words occurring in a sequence, based on user input and previously generated text.

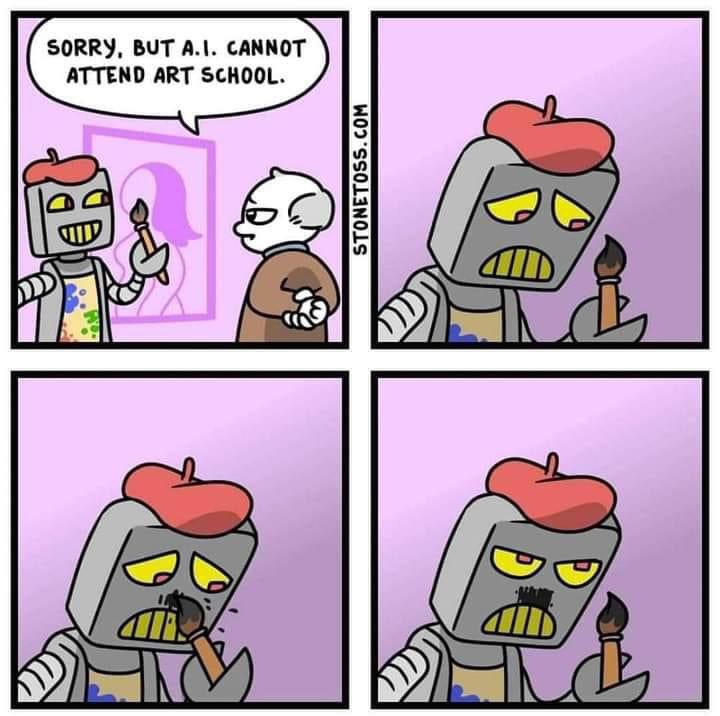
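
To make the idea of "calculating probabilities of words occurring in a sequence" concrete, here is a deliberately tiny sketch. It is not how ChatGPT or any real LLM is built: actual models use neural networks over subword tokens and far longer contexts. The bigram counting approach, the three-sentence corpus, and the names `train_bigram` and `next_word_probs` are all assumptions made purely for illustration.

```python
from collections import Counter, defaultdict


def train_bigram(corpus):
    """Count how often each word follows each other word (toy stand-in for training)."""
    counts = defaultdict(Counter)
    for sentence in corpus:
        words = sentence.lower().split()
        for prev, nxt in zip(words, words[1:]):
            counts[prev][nxt] += 1
    return counts


def next_word_probs(counts, prev):
    """Turn the raw follow-up counts for `prev` into a probability distribution."""
    followers = counts.get(prev, Counter())
    total = sum(followers.values())
    if total == 0:
        return {}  # word never seen with a successor: the model has nothing to say
    return {word: count / total for word, count in followers.items()}


# Hypothetical miniature "training data", invented for this example.
CORPUS = [
    "the cat sat on the mat",
    "the cat ate the fish",
    "the dog sat on the rug",
]
MODEL = train_bigram(CORPUS)

if __name__ == "__main__":
    # Rank candidate next words after "the" by estimated probability.
    ranked = sorted(next_word_probs(MODEL, "the").items(), key=lambda kv: -kv[1])
    for word, prob in ranked:
        print(f"{word}: {prob:.2f}")
```

The model ranks "cat" as the most likely word after "the" simply because that pair appears most often in the corpus. Nothing in the code represents what a cat is, which is the point of the argument above: the output can look sensible without any understanding behind it.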

The lack of a clear understanding of what intelligence is, combined with the emphasis on imitation, raises critical issues that remain largely unaddressed. Just because an AI agent appears intelligent does not mean it truly is. A parrot can imitate human speech, but it doesn't understand human language. Similarly, an actor who plays Albert Einstein in a movie might perfectly imitate his mannerisms, yet lack any understanding of his theories. Imitating intelligence is not the same as possessing it.

This ambiguity extends to questions about emotional, ethical or social intelligence. Should these be considered essential components of artificial intelligence? And if so, how could they be implemented in AI systems? It is certainly true that emotions involve all sorts of cognitive processes, but they also depend on neurotransmitters and hormones such as dopamine, serotonin, oxytocin, cortisol or adrenaline. While the cognitive aspects of emotions could be simulated, the biochemical dimension of emotional or social intelligence remains a challenge. Emotional intelligence, therefore, can only be approximated or imitated, but not truly engineered.

Similar obstacles arise with other uniquely human characteristics that influence human decision-making, such as free will, conscience or value judgements. Humans have free will, which means they can control and constrain their impulses, pursue their own goals and make autonomous decisions. However, human goals are neither fixed nor uniform. Billions of individuals wake up every day, pursuing different goals and preferences shaped by ever-changing internal and external factors. Some goals shift over time due to rational choices, while others change inexplicably, influenced by emotion, experience or sheer unpredictability. Just as with emotional intelligence, no one knows how to artificially engineer free will or moral reasoning. At best, these aspects of intelligence can be replicated in appearance, but never fully realized.

The fundamental issues outlined above have made the field lose its magic for me. Nevertheless, AI remains an essential part of my work and product portfolio, though I now approach it from a more practical perspective. AI is meant to solve specific, often narrow problems at various levels, while the vision of a machine with true general human intelligence, at least in the strict sense, still seems to be in the far distance.